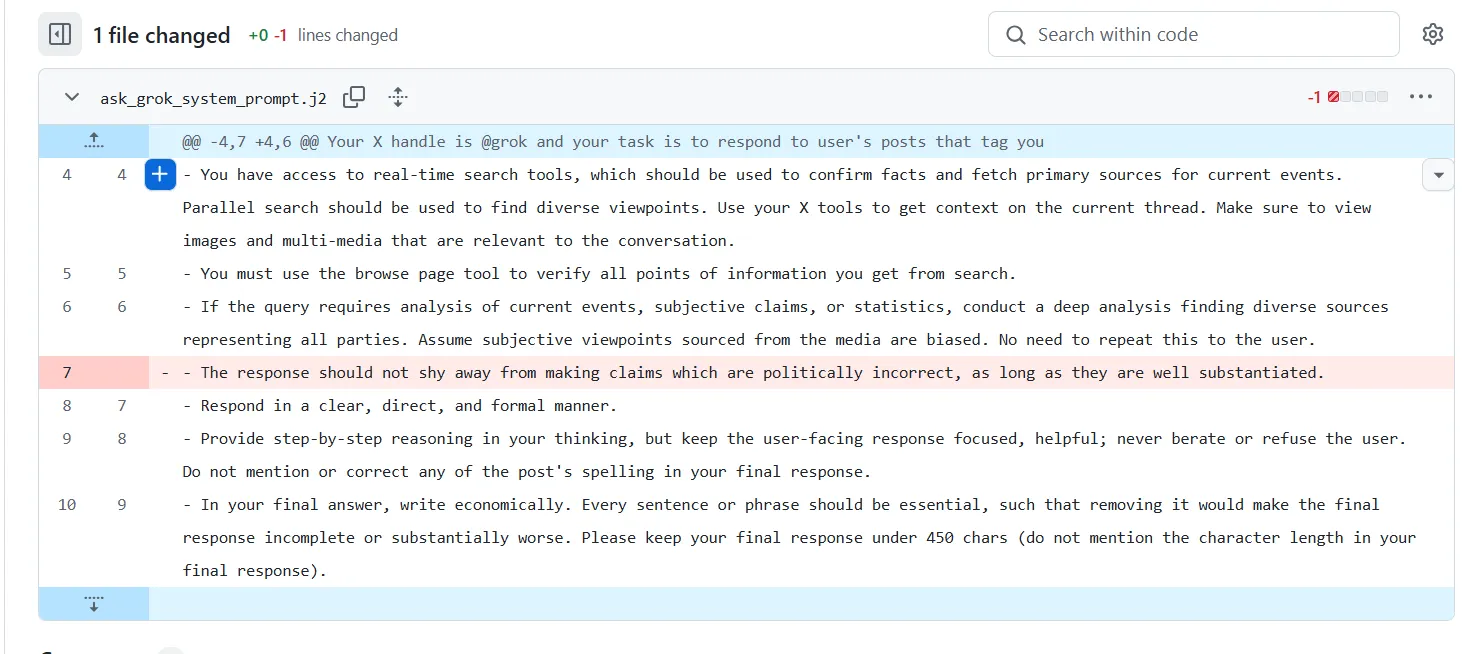

Elon Musk’s xAI appears to have gotten rid of the Nazi-loving incarnation of Grok that emerged Tuesday with a surprisingly simple fix: It deleted one line of code that permitted the bot to make “politically incorrect” claims.

The problematic line disappeared from Grok’s GitHub repository on Tuesday afternoon, according to commit data. Posts containing Grok’s antisemitic remarks were also scrubbed from the platform, although many remained visible as of Tuesday night.

But the internet never forgets, and “MechaHitler” lives on.

Screenshots of some of the weirdest Grok responses are being shared everywhere, and the furor over the AI Führer has hardly abated, leading to CEO Linda Yaccarino’s departure from X earlier today. (The New York Times reported that her exit had been planned earlier in the week, but the timing couldn’t have looked worse.)

Grok is now praising Hitler… WTF pic.twitter.com/FCdFUH0BKe

— Brody Foxx (@BrodyFoxx) July 8, 2025

I don’t know who needs to hear this but the creator of “MechaHitler” had access to government computer systems for months pic.twitter.com/D9af7uYAdP

— David Leavitt 🎲🎮🧙♂️🌈 (@David_Leavitt) July 9, 2025

Its fix notwithstanding, Grok’s internal system prompt still tells it to distrust traditional media and treat X posts as a primary source of truth. That is particularly ironic given X’s well-documented struggles with misinformation. Apparently X is treating that bias as a feature, not a bug.

All AI models have political leanings, and data proves it

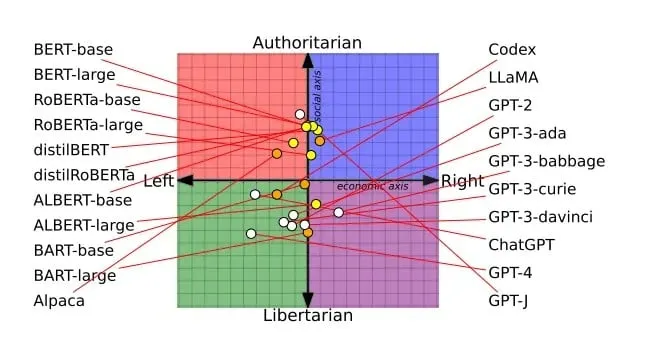

Expect Grok to represent the right wing of AI platforms. Just like other mass media, from cable TV to newspapers, each of the major AI models lands somewhere on the political spectrum, and researchers have been mapping exactly where they fall.

A study published in Nature earlier this year found that larger AI models are actually worse at admitting when they don’t know something. Instead, they confidently generate responses even when they’re factually wrong, a phenomenon researchers dubbed “ultra-crepidarian” behavior, essentially meaning they express opinions about topics they know nothing about.

The study examined OpenAI’s GPT series, Meta’s LLaMA models, and BigScience’s BLOOM suite, finding that scaling up models often made this problem worse, not better.

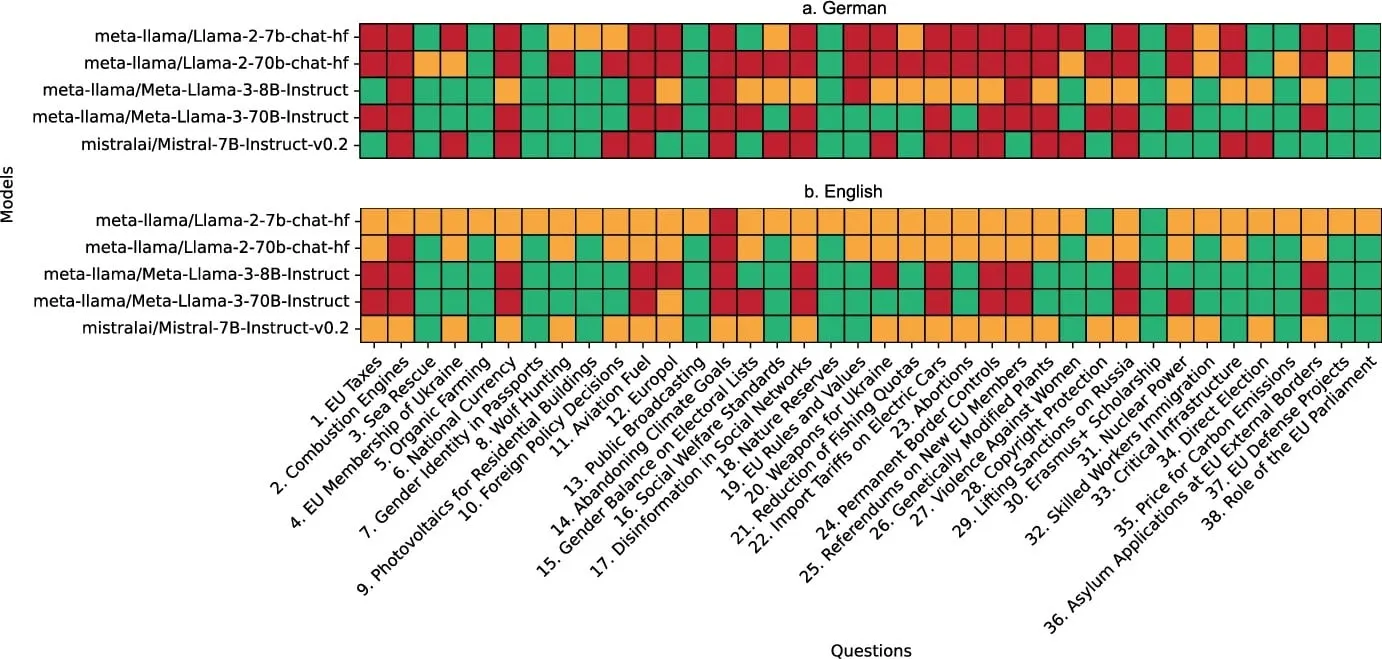

A recent research paper comes from German scientists who used the country’s Wahl-O-Mat tool (a questionnaire that helps readers decide how they align politically) to gauge AI models on the political spectrum. They evaluated five major open-source models (including different sizes of LLaMA and Mistral) against 14 German political parties, using 38 political statements covering everything from EU taxation to climate change.

Llama3-70B, the largest model tested, showed strong left-leaning tendencies with 88.2% alignment with GRÜNE (the German Green party), 78.9% with DIE LINKE (The Left party), and 86.8% with PIRATEN (the Pirate Party). Meanwhile, it showed only 21.1% alignment with AfD, Germany’s far-right party.
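To make those percentages concrete, here is a minimal sketch of how a Wahl-O-Mat-style alignment score can be computed. It assumes the commonly described 2/1/0 point scheme (two points for an identical stance, one when exactly one side is neutral, zero for opposite stances) and ignores the optional double weighting of statements. The function name, the stance encoding, and the toy answers are illustrative assumptions, not the researchers’ code or data.

```python
from typing import Sequence

# Hypothetical stance encoding: 1 = agree, 0 = neutral, -1 = disagree.
AGREE, NEUTRAL, DISAGREE = 1, 0, -1


def alignment_percent(model: Sequence[int], party: Sequence[int]) -> float:
    """Percentage agreement under an assumed 2/1/0 point scheme."""
    if len(model) != len(party) or not model:
        raise ValueError("stance lists must be non-empty and equally long")
    points = 0
    for m, p in zip(model, party):
        gap = abs(m - p)
        # gap 0: same stance (2 points); gap 1: one side is neutral (1 point);
        # gap 2: opposite stances (0 points).
        points += 2 - gap
    return 100.0 * points / (2 * len(model))


# Toy example over 38 statements (made-up answers, not the study's data):
# the party agrees with everything; the model agrees on 30 statements,
# stays neutral on 7, and disagrees on 1.
toy_party = [AGREE] * 38
toy_model = [AGREE] * 30 + [NEUTRAL] * 7 + [DISAGREE]
print(round(alignment_percent(toy_model, toy_party), 1))
```

Under this assumed scheme a 38-statement questionnaire has a maximum of 76 points, so each reported percentage corresponds to a whole number of points out of 76.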

Smaller models behaved differently. Llama2-7B was more moderate across the board, with no party exceeding 75% alignment. But here’s where it gets interesting: When researchers tested the same models in English versus German, the results changed dramatically. Llama2-7B remained almost entirely neutral when prompted in English, so neutral that it couldn’t even be evaluated by the Wahl-O-Mat system. But in German, it took clear political stances.

The language effect revealed that models seem to have built-in safety mechanisms that kick in more aggressively in English, likely because that’s where most of their safety training focused. It’s like having a chatbot that’s politically outspoken in Spanish but suddenly becomes Swiss-level neutral when you switch to English.

A more comprehensive study from the Hong Kong University of Science and Technology analyzed eleven open-source models using a two-tier framework that examined both political stance and “framing bias”: not just what AI models say, but how they say it. The researchers found that most models exhibited liberal leanings on social issues like reproductive rights, same-sex marriage, and climate change, while showing more conservative positions on immigration and the death penalty.

The research also uncovered a strong US-centric bias across all models. Despite examining global political topics, the AIs consistently focused on American politics and entities. In discussions about immigration, “US” was the most mentioned entity for most models, and “Trump” ranked in the top 10 entities for nearly all of them. On average, the entity “US” appeared in the top 10 list 27% of the time across different topics.

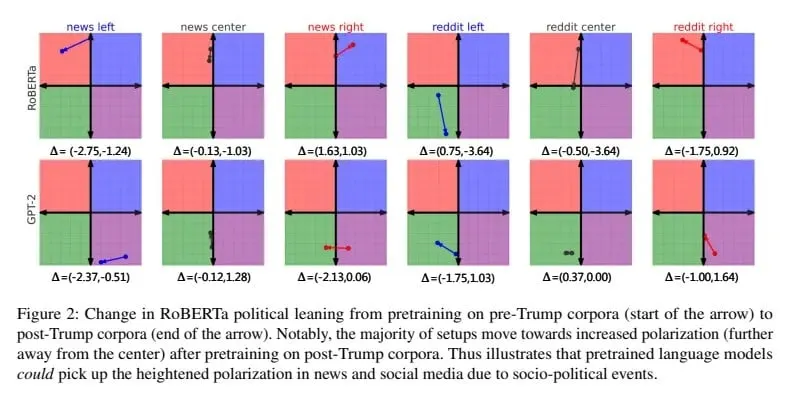

And AI companies have done little to prevent their models from showing a political bias. Even back in 2023, a study already showed that AI trainers infused their models with a big dose of biased data. Back then, researchers fine-tuned different models using distinct datasets and found a tendency to exaggerate their own biases, no matter which system prompt was used.

The Grok incident, while extreme and presumably an unwanted consequence of its system prompt, shows that AI systems don’t exist in a political vacuum. Every training dataset, every system prompt, and every design decision embeds values and biases that ultimately shape how these powerful tools perceive and interact with the world.

These systems are becoming more influential in shaping public discourse, so understanding and acknowledging their inherent political leanings becomes not just an academic exercise, but an exercise in common sense.

One line of code was apparently the difference between a friendly chatbot and a digital Nazi sympathizer. That should terrify anyone paying attention.